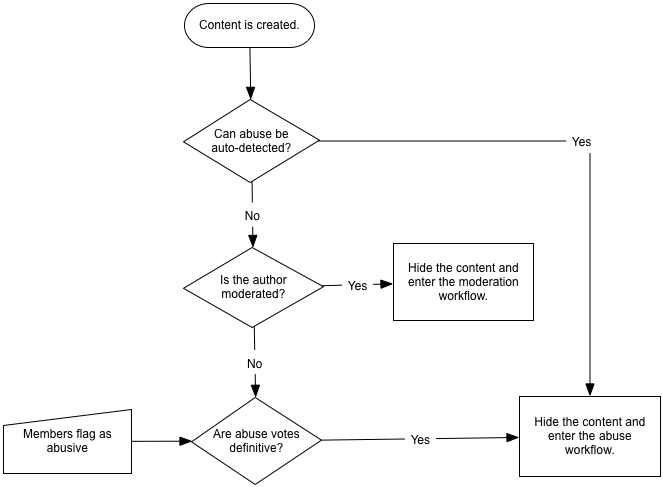

Moderation, SPAM prevention, and abuse handling are all part of a single workflow that prevents inappropriate content from being shown in the community. In general, the content creation workflow is:

When content is created or edited, it is reviewed by automated abuse detection rules. If the rules find the content to be abusive (SPAM, for example), the content is immediately flagged as abusive, hidden from the community, and placed in the abuse workflow.

If the automatic abuse detectors do not identify the content as abusive or SPAM, the author is checked to determine whether their content should be moderated. An author's account may be set to moderate all of their content, or the application in which the content was created may require that all content within it be moderated. In either case, the content enters the moderation workflow.

If the content is not moderated and not automatically detected as abusive, it is visible in the community. Members who view it and consider it inappropriate or abusive can flag the content as abusive. If the flags received outweigh the author's reputation, the content is hidden from the community and enters the abuse workflow.
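The order of checks described above can be summarized in a short sketch. The Python below is illustrative only: `Route`, `route_new_content`, and its parameters are hypothetical names, not part of the platform's API, and the function simply mirrors the sequence in the prose.

```python
from enum import Enum


class Route(Enum):
    ABUSE = "abuse workflow"
    MODERATION = "moderation workflow"
    PUBLISHED = "visible in the community"


def route_new_content(detected_abusive: bool,
                      author_moderated: bool,
                      application_moderated: bool) -> Route:
    """Decide where newly created or edited content goes (hypothetical helper)."""
    # Automated abuse detection runs first and takes precedence.
    if detected_abusive:
        return Route.ABUSE
    # Next, check whether the author or the application requires moderation.
    if author_moderated or application_moderated:
        return Route.MODERATION
    # Otherwise the content is published; members may still flag it later.
    return Route.PUBLISHED
```

For example, `route_new_content(False, True, False)` returns `Route.MODERATION`, because the content passed abuse detection but the author's account is moderated.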

[toc]

Moderation Workflow

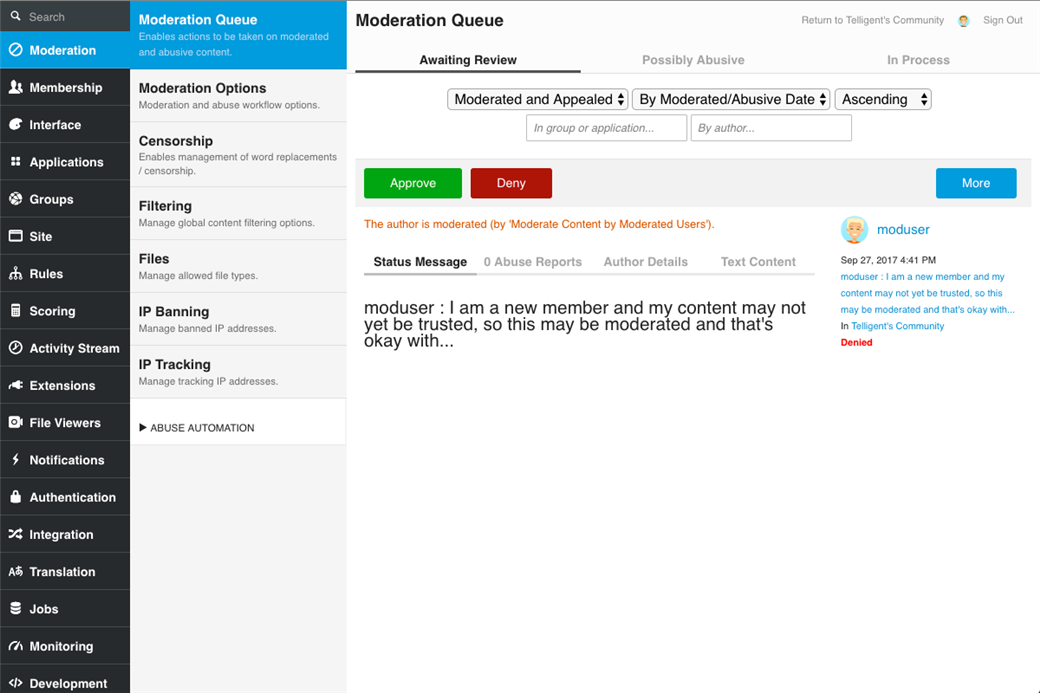

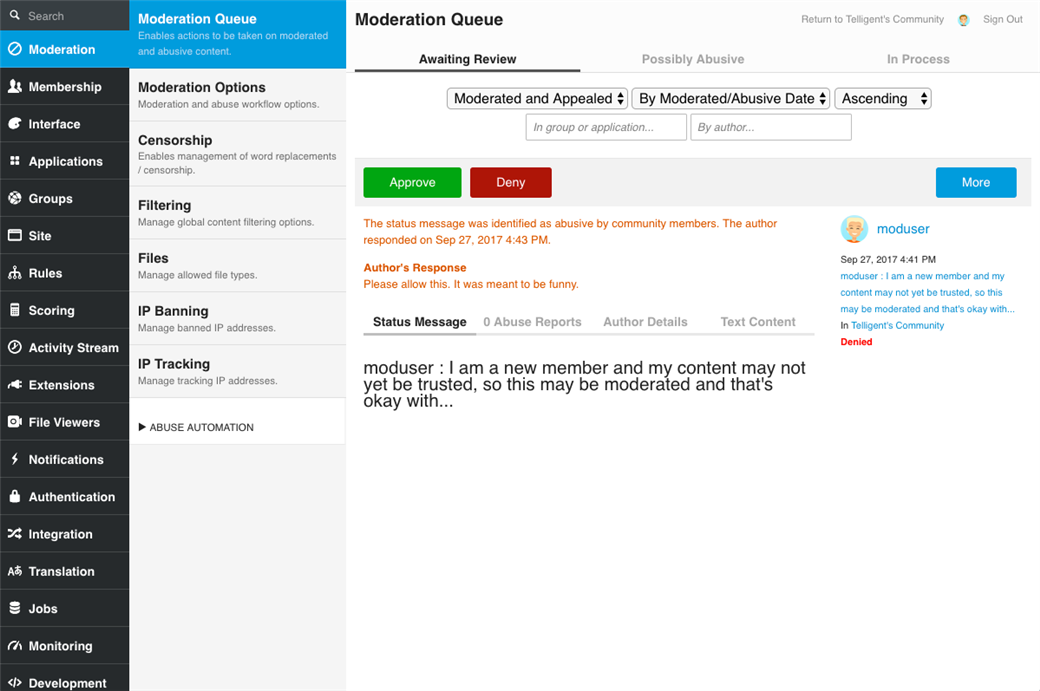

When content enters the moderation workflow, it is listed on the Awaiting Review tab of Administration > Moderation > Moderation Queue:

The process for reviewing moderated content is:

The moderator will be notified that there is content to review. When they review content in the Moderation Queue, they can approve or deny the content. If the content is approved, it is immediately shown in the community and the author is notified that their content is available.

If the moderator considers the content abusive and denies the moderated content, the content enters the abuse workflow.

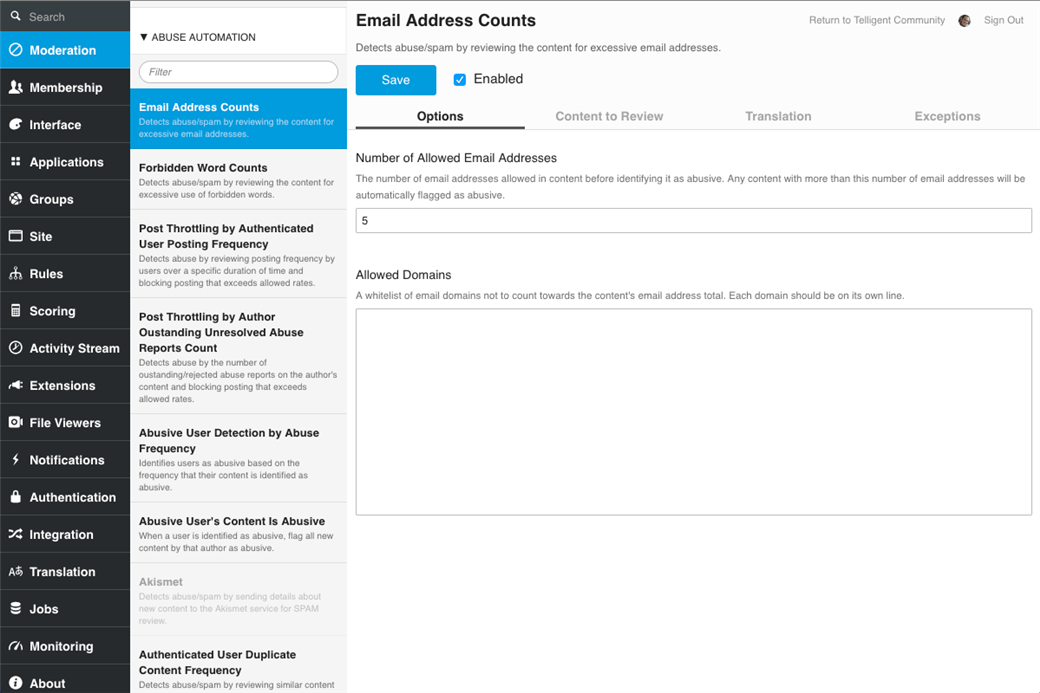

Automatic Abuse Detection

When content is created or edited, it is evaluated by automatic abuse detectors, which are configurable rules that review details of the content and its author to determine whether it is likely abusive. Automatic abuse detection rules are listed in Administration > Moderation > Abuse Automation:

Each automation rule can be enabled or disabled and configured to meet the needs of the community. Most automation rules let you specify which types of content they review on their Content to Review tab.

If any abuse automation rule considers newly created or edited content abusive, the content immediately enters the abuse workflow.

Manual Abuse Detection

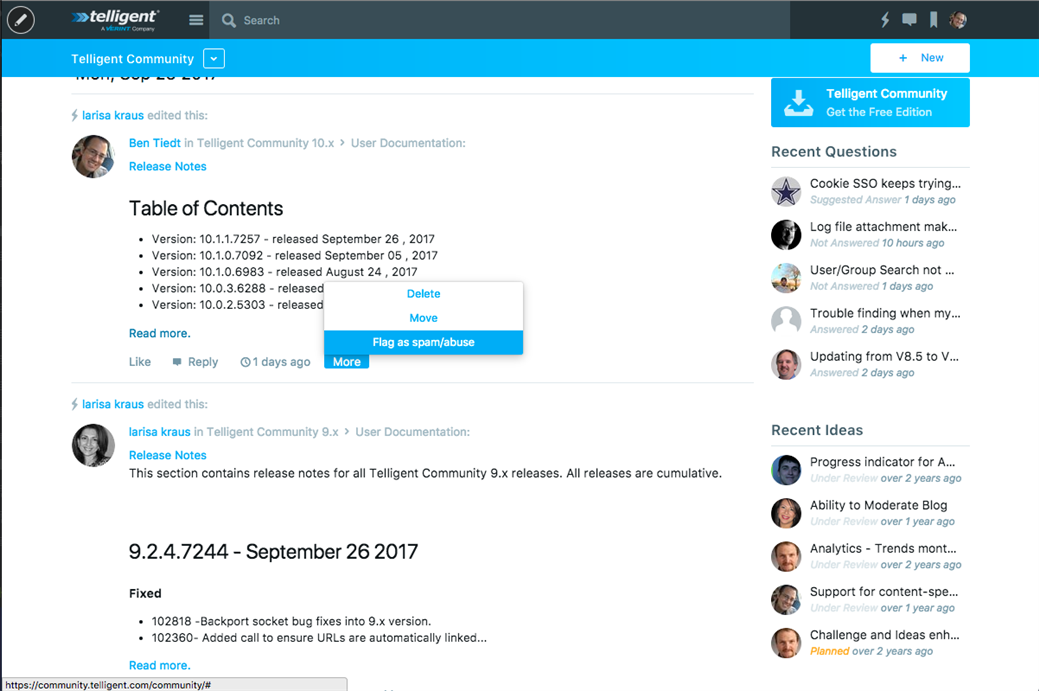

If content is not moderated or automatically determined to be abusive, it is published to the community. At that time, members can view the content. If members consider the content to be inappropriate or abusive, they can flag the content as abusive:

The option to flag content as abusive is generally shown in the More menu associated with the content. Each member can flag a given piece of content only once.

If a moderator flags the content as abusive, it immediately enters the abuse workflow. Otherwise, when non-moderators flag the content, the reputation of the flagging members, weighted by the number of votes, is compared to the reputation of the content's author. If the votes outweigh the author's reputation, the content enters the abuse workflow.
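As a rough illustration of this comparison, the sketch below assumes that each flag contributes the flagging member's reputation score and that the total is compared directly with the author's score. The exact weighting the platform applies is not specified here, so the formula, the function name, and the parameters are all assumptions.

```python
from typing import Sequence


def flags_outweigh_author(author_reputation: float,
                          reporter_reputations: Sequence[float],
                          flagged_by_moderator: bool = False) -> bool:
    """Return True if the content should enter the abuse workflow."""
    # A flag from a moderator is decisive on its own.
    if flagged_by_moderator:
        return True
    # Assumed weighting: every flag adds the reporter's reputation, so more
    # flags (or flags from more reputable members) carry more weight.
    combined_weight = sum(reporter_reputations)
    return combined_weight > author_reputation
```

Under this assumed weighting, two flags from members with a reputation of 30 would not outweigh an author with a reputation of 80, but three such flags would.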

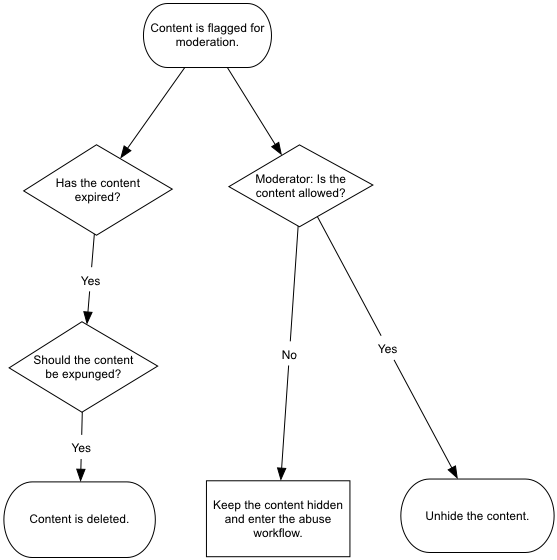

Abuse Workflow

All content that enters the abuse workflow is hidden, with the exception of members. If a member is flagged as abusive, they remain visible within the community, but the Abusive User's Content Is Abusive automation rule ensures that any content they create immediately enters the abuse workflow. This automation rule is not retroactive: it only reviews content created after the member has been flagged. Abusive content is shown within the Moderation Queue:

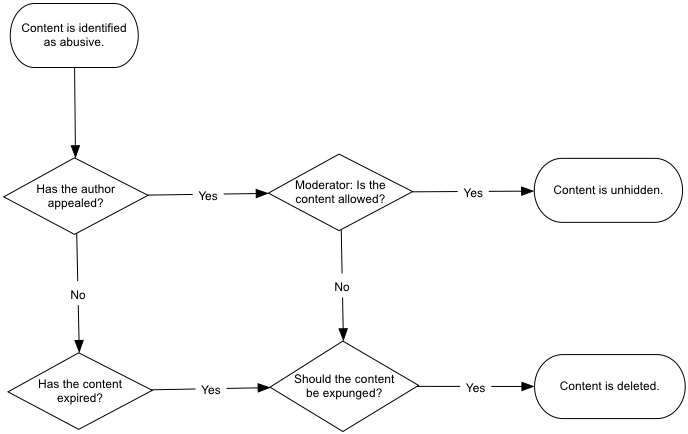

Abusive content follows this workflow:

When content enters the abuse workflow, it is hidden and the author is notified that the content is considered abusive. The notification includes a link to appeal that designation.

If the author does not appeal within the Appeal Time Window, the content expires and is flagged for expungement.

If the author appeals the abusive designation, the content and its appeal appear on the Awaiting Review tab of Administration > Moderation > Moderation Queue, and moderators who can review the content are notified that there is content to review.

The moderator can then review the content and the appeal and either approve or deny the appeal. If the appeal is approved, the content is shown to the community again. If the appeal is denied, the author is notified of the denial and the content is flagged for expungement.

When content is flagged for expungement, it waits for a configurable period of time and is then deleted completely from the community.
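The abuse workflow above behaves like a small state machine. The following Python sketch models it with a transition table. The state names, event names, and the `advance` function are hypothetical labels chosen for illustration and do not correspond to identifiers in the platform.

```python
from enum import Enum, auto


class AbuseState(Enum):
    HIDDEN = auto()      # hidden, author notified, appeal still possible
    APPEALED = auto()    # appeal awaiting moderator review
    RESTORED = auto()    # appeal approved, content visible again
    EXPUNGING = auto()   # flagged for expungement, waiting to be deleted
    DELETED = auto()     # removed from the community


# (current state, event) -> next state; event names are hypothetical.
TRANSITIONS = {
    (AbuseState.HIDDEN, "author_appeals"): AbuseState.APPEALED,
    (AbuseState.HIDDEN, "appeal_window_expires"): AbuseState.EXPUNGING,
    (AbuseState.APPEALED, "moderator_approves"): AbuseState.RESTORED,
    (AbuseState.APPEALED, "moderator_denies"): AbuseState.EXPUNGING,
    (AbuseState.EXPUNGING, "expunge_window_expires"): AbuseState.DELETED,
}


def advance(state: AbuseState, event: str) -> AbuseState:
    """Apply one event; reject transitions the workflow does not describe."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not valid in state {state.name}") from None
```

For example, content whose appeal is denied moves from `HIDDEN` to `APPEALED` to `EXPUNGING`, and is `DELETED` once the expunge window has passed.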

Moderation and Abuse Configuration

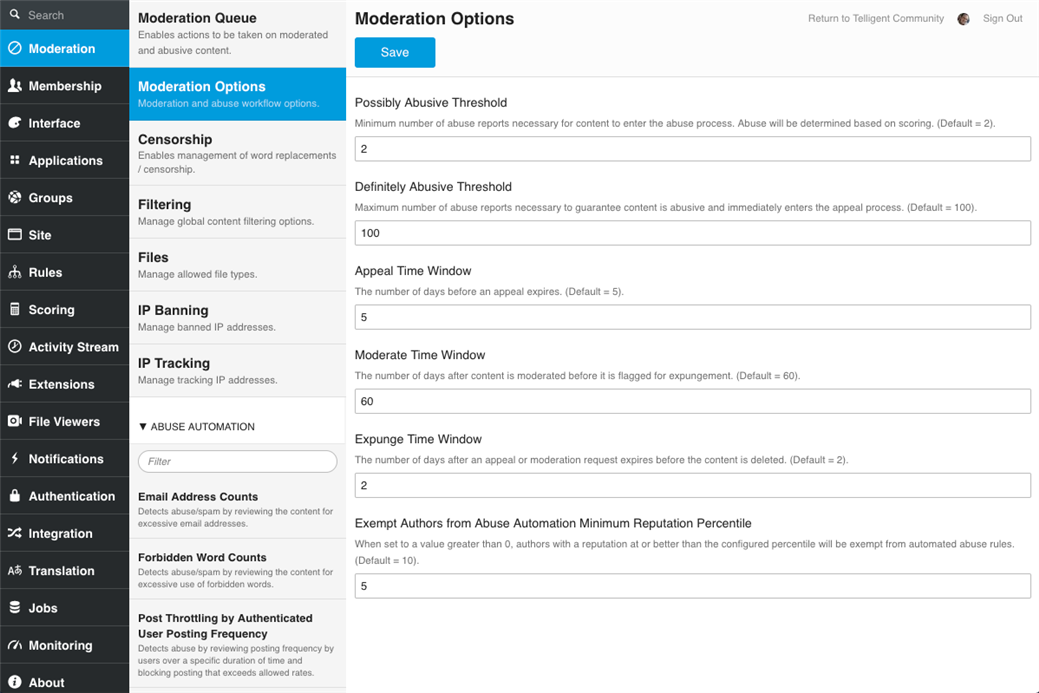

In addition to the configuration of abuse automation rules, the Administration > Moderation > Moderation Options panel includes additional configuration options that govern the moderation and abuse workflows:

Here, you can specify:

- Possibly Abusive Threshold: The minimum number of abuse reports required before content is reviewed for potential abuse. Once content receives this minimum number of votes, the reputation scores of the users who flagged the content are considered when determining if the content enters the appeal process. Note that if a member who can moderate the content flags it as abusive, it is immediately identified as abusive and is not subject to this minimum.

- Definitely Abusive Threshold: The maximum number of abuse reports allowed for a piece of content before it is always flagged as abusive. If any piece of content has this many votes, it is always identified as abusive regardless of the reputation of the reporters and author.

- Appeal Time Window: The number of days an author has to appeal abusive content before it is expunged (scheduled for deletion).

- Moderate Time Window: The number of days a moderator has to review moderated content before it is expunged (scheduled for deletion).

- Expunge Time Window: The number of days that expunged content waits before it is deleted.

- Exempt Authors from Abuse Automation Minimum Reputation Percentile: The top reputation percentile that is exempt from abuse automation processing. For example, with a value of 5 (as in the screenshot above), content from members whose reputation is in the top 5% is not reviewed by abuse automation. Authors who can review abuse where the content is created are also exempt from abuse automation.

If any changes are made, be sure to click Save to commit the changes.
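To make the interaction between the two report thresholds and the exemption percentile concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `ModerationOptions` class, the function names, and the returned labels are hypothetical. The reputation comparison is passed in as a boolean because its exact formula is not described here, and flags from moderators, which bypass the thresholds, are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationOptions:
    possibly_abusive_threshold: int    # minimum reports before reputation is weighed
    definitely_abusive_threshold: int  # reports at which content is always abusive
    exempt_top_percent: float          # e.g. 5 means the top 5% by reputation


def classify_reports(report_count: int,
                     reporters_outweigh_author: bool,
                     options: ModerationOptions) -> str:
    """Classify content flagged by non-moderators (labels are hypothetical)."""
    if report_count <= 0:
        return "not reported"
    # At the upper threshold, reputation no longer matters.
    if report_count >= options.definitely_abusive_threshold:
        return "abusive"
    # Between the thresholds, reputation decides the outcome.
    if (report_count >= options.possibly_abusive_threshold
            and reporters_outweigh_author):
        return "abusive"
    return "possibly abusive"


def is_exempt_from_automation(author_top_percent: float,
                              can_review_abuse: bool,
                              options: ModerationOptions) -> bool:
    """author_top_percent=3 means the author ranks in the top 3% by reputation."""
    return can_review_abuse or author_top_percent <= options.exempt_top_percent
```

With illustrative values such as `ModerationOptions(3, 10, 5)`, content with two reports stays "possibly abusive" regardless of reputation, while content with ten reports is always "abusive".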

Reviewing Content in the Abuse and Moderation Workflow

The Moderation Queue (in Administration > Moderation) can be used to review content that is not yet identified as abusive (but has abuse reports) and content anywhere within the abuse process.

To view content that has been flagged as abusive but has not yet been determined to be abusive, review the Possibly Abusive tab. Here, you can proactively ignore or deny content before it formally enters the abuse workflow.

To review content that is elsewhere in the abuse workflow, view the In Process tab. Here, you can review content based on its current status within the workflow and filter to a specific group, application, or user. This tab can also be used to correct an error or review abusive content that has not yet been appealed.