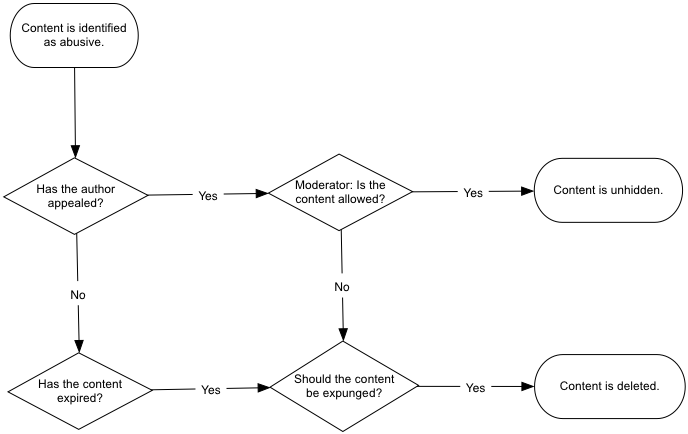

According to the abuse and moderation workflow:

How does moderation and abuse work?

When content is reported for moderation, marked as abusive, and not appealed, it should enter the workflow and eventually be expunged.

I was under the impression this also applies to user (member) profiles: the account is reported as abusive, it enters the workflow, and eventually it's expunged and deleted, right?

This seems like a great idea because it gives any spam automation a "cool-down" for however long expunging takes, during which the spammer is unlikely to be able to re-register with the same details.

Except that when I look through the member accounts for our v12 Verint Community, there are spam accounts that have been marked as abusive but only show as Disapproved. They are still there and have not been expunged.

What can I do about this? Are user profiles not considered "content" to expunge? Is there an automation or script I should be running to make sure that these accounts and their content are deleted?

Ideally, I want to be able to mark a user profile as abusive, have it enter the workflow, and have the account and its content expunged.

Does it not work this way? Is there something missing in the logic? Do we have to go through and report all of their content individually as abusive, and then manually delete their accounts? I really hope not.

This is important because we still have their accounts on the site, and their spam content on the site, and usually spam in their user profiles too.

My two main questions are:

1. How is this supposed to work? If it's supposed to work as I laid out, why isn't it?

2. How do I mass clean out these disapproved accounts and their content in a "clean" manner that doesn't leave the site with broken comments, broken content, and broken links?
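
To make question 2 concrete, this is roughly the selection step I have in mind, written as a dry run only. Everything in it is hypothetical: the `Member` fields, the `"Disapproved"` string, and the 14-day grace period are my own assumptions, not the Verint data model or API. It deliberately stops before deleting anything.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class Member:
    # Hypothetical record shape; these field names are assumptions,
    # not the actual Verint Community schema.
    user_id: int
    username: str
    moderation_state: str              # e.g. "Disapproved"
    marked_abusive_on: Optional[date]  # None if never marked abusive
    appealed: bool


def select_for_cleanup(members: List[Member], today: date,
                       grace_days: int = 14) -> List[Member]:
    """Return members that are disapproved, marked abusive, not appealed,
    and whose abuse mark is at least `grace_days` old (assumed grace period)."""
    cutoff = today - timedelta(days=grace_days)
    return [
        m for m in members
        if m.moderation_state == "Disapproved"
        and not m.appealed
        and m.marked_abusive_on is not None
        and m.marked_abusive_on <= cutoff
    ]


def dry_run_report(members: List[Member], today: date,
                   grace_days: int = 14) -> List[str]:
    """Describe what would be removed, without removing anything."""
    return [
        f"WOULD DELETE user {m.user_id} ({m.username}) and their content"
        for m in select_for_cleanup(members, today, grace_days)
    ]
```

The part I don't know how to do safely is the actual deletion of each selected account together with its content, which is what I'm asking about.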